The REPL Is Dead. Long Live the Factory.

This is a companion piece to my vibe coding devlog series, and my bold prediction for 2026.

A 2025 randomized controlled trial by METR found that AI coding tools made experienced developers 19% slower, not faster. Developers predicted a 24% speedup. ML experts predicted 38%. The actual result? A slowdown. On real tasks. With frontier models.

The problem isn't the models. It's the execution pattern.

My prediction for 2026: The year of the orchestrator. The REPL pattern that powers every AI coding tool will evolve, or be replaced by tools that have.

The REPL isn't dead. It's incomplete. Add orchestration and you get something that scales. The same operational discipline that made infrastructure reliable (treat unreliable components as manageable, wrap them in observability and automation) applies directly to AI agents. We did it with servers. We did it with containers. Now we're doing it with AI agents.

Steve Yegge's Gas Town confirmed what the METR data suggests: the REPL is an incomplete abstraction. The right one looks like Kubernetes: a control plane orchestrating ephemeral workers against a declarative state store. 12-Factor AgentOps applies the same infrastructure patterns to AI workflows.

This article bridges the gap between where most people are (REPL loops) and where the tooling is headed (orchestrated agent factories).

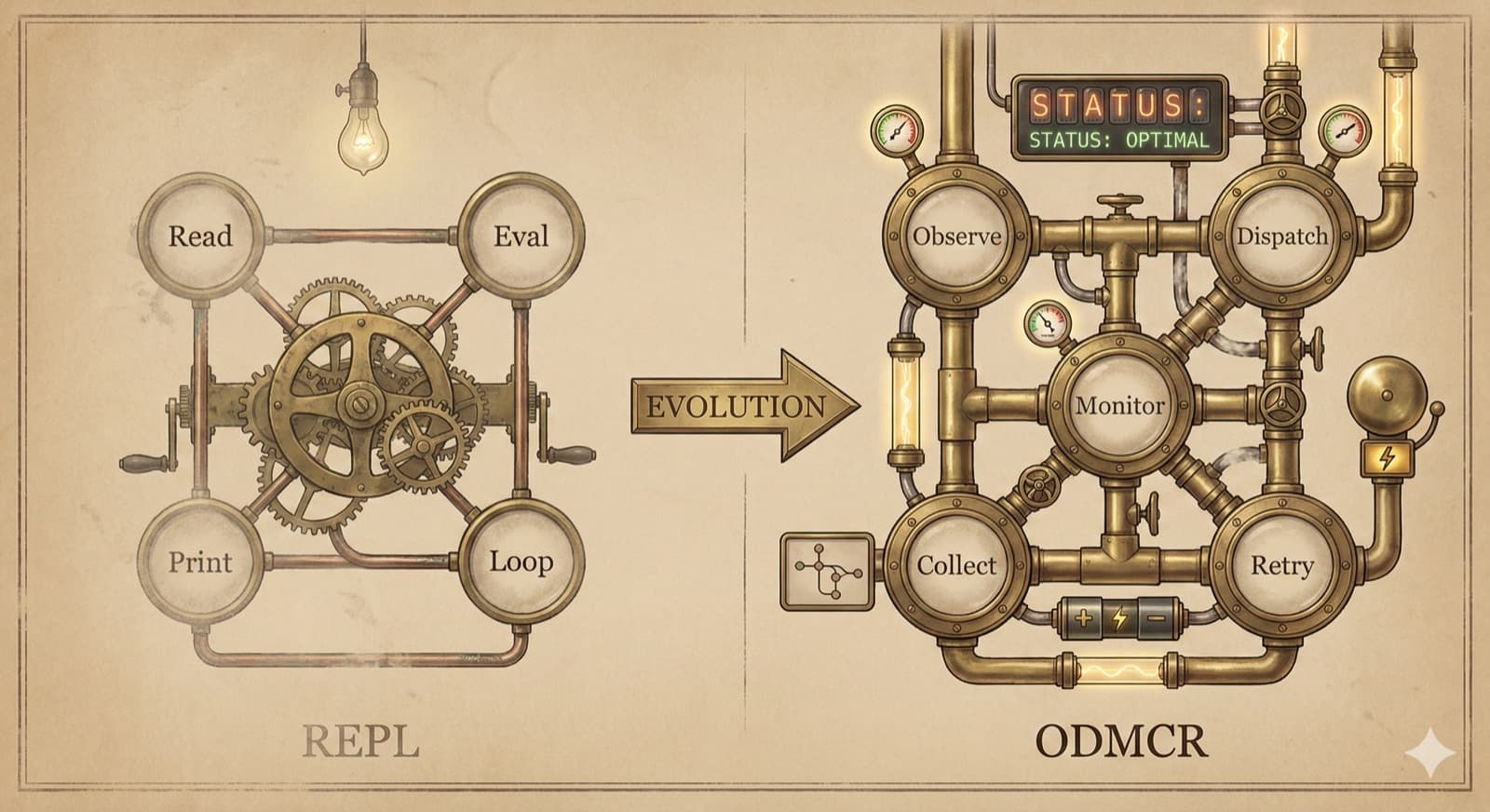

Every agentic coding tool in 2025 was built on the same foundation: the REPL. Read-Eval-Print-Loop. It's elegant. It's simple. It doesn't scale. Not without help.

If you've run an agent loop overnight and woken up to hundreds of dollars in burned tokens, you already know something is broken. If you think the REPL pattern is the endgame for AI coding, grab a seat.

The Pattern Everyone Loves

The agent execution loop has become gospel. Prepare context, call model, handle response, iterate until done. Every major player uses some version of it: Replit Agent, GitHub Copilot agent mode, Claude Code, Cursor. Victor Dibia's explainer captures the pattern well.
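Stripped to its core, the loop fits in a few lines. This is a minimal sketch, assuming a hypothetical `call_model` function that takes the prepared context and returns text plus a done flag. It isn't any specific tool's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelResponse:
    text: str
    done: bool  # the model (or a test run) says the task is finished


def agent_loop(task: str, call_model: Callable[[str], ModelResponse], max_iters: int = 10) -> list[str]:
    """Prepare context, call model, handle response, iterate until done."""
    transcript: list[str] = []
    for _ in range(max_iters):
        # Prepare context: the task plus everything said so far
        context = "\n".join([task, *transcript])
        # Call model (hypothetical stand-in for a real model API)
        response = call_model(context)
        # Handle response: append it to the running transcript
        transcript.append(response.text)
        # Iterate until done (or until the iteration cap)
        if response.done:
            break
    return transcript


# Usage with a stub model that finishes on its third call
def stub_model(context: str) -> ModelResponse:
    step = context.count("\n") + 1
    return ModelResponse(f"step {step}", done=step == 3)


print(agent_loop("fix the failing test", stub_model))  # ['step 1', 'step 2', 'step 3']
```

Notice that the context grows on every pass. That growth is what the rest of this piece is about.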

Geoffrey Huntley's Ralph pattern turned it into a meme. A bash loop that keeps feeding the agent until tests pass. Simple. Viral. I was a Ralph power user. I ran it overnight. I felt very clever.

Then the quality collapsed.

The 50 First Dates Problem

Here's the failure mode nobody warns you about: the agent spirals on the same bug for hours, confident it's making progress. It'll try the same fix seventeen times with minor variations, each time expecting different results.

It's not insanity. It's optimism without memory.

The REPL pattern is stateless by design. Every iteration starts fresh. The loop doesn't know what already failed. It's Drew Barrymore waking up every morning in 50 First Dates, except instead of falling in love with you, it's falling in love with solutions that already didn't work.

If you've ever played a roguelike without save states, you know this feeling. Die on floor 47, start over from floor 1. Except the agent doesn't even know it died.
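Here's a toy model of that failure. It assumes a hypothetical agent that always reaches for its favorite fix first. The two runs differ in only one way: whether the loop carries forward a record of what already failed.

```python
# Hypothetical fixes, in order of the agent's "confidence"
CANDIDATE_FIXES = ["bump the timeout", "add a retry", "fix the null check"]


def propose_fix(already_failed: set[str]) -> str:
    """Optimistic agent: returns its most confident fix not known to have failed."""
    for fix in CANDIDATE_FIXES:
        if fix not in already_failed:
            return fix
    return CANDIDATE_FIXES[0]  # out of ideas: start over


def run_loop(correct_fix: str, remember_failures: bool, max_iters: int = 5) -> list[str]:
    failed: set[str] = set()
    tried: list[str] = []
    for _ in range(max_iters):
        # Stateless loop: every iteration starts fresh with no failure history
        fix = propose_fix(failed if remember_failures else set())
        tried.append(fix)
        if fix == correct_fix:
            break
        failed.add(fix)
    return tried


print(run_loop("fix the null check", remember_failures=False))  # the same fix, five times
print(run_loop("fix the null check", remember_failures=True))   # three attempts, then success
```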

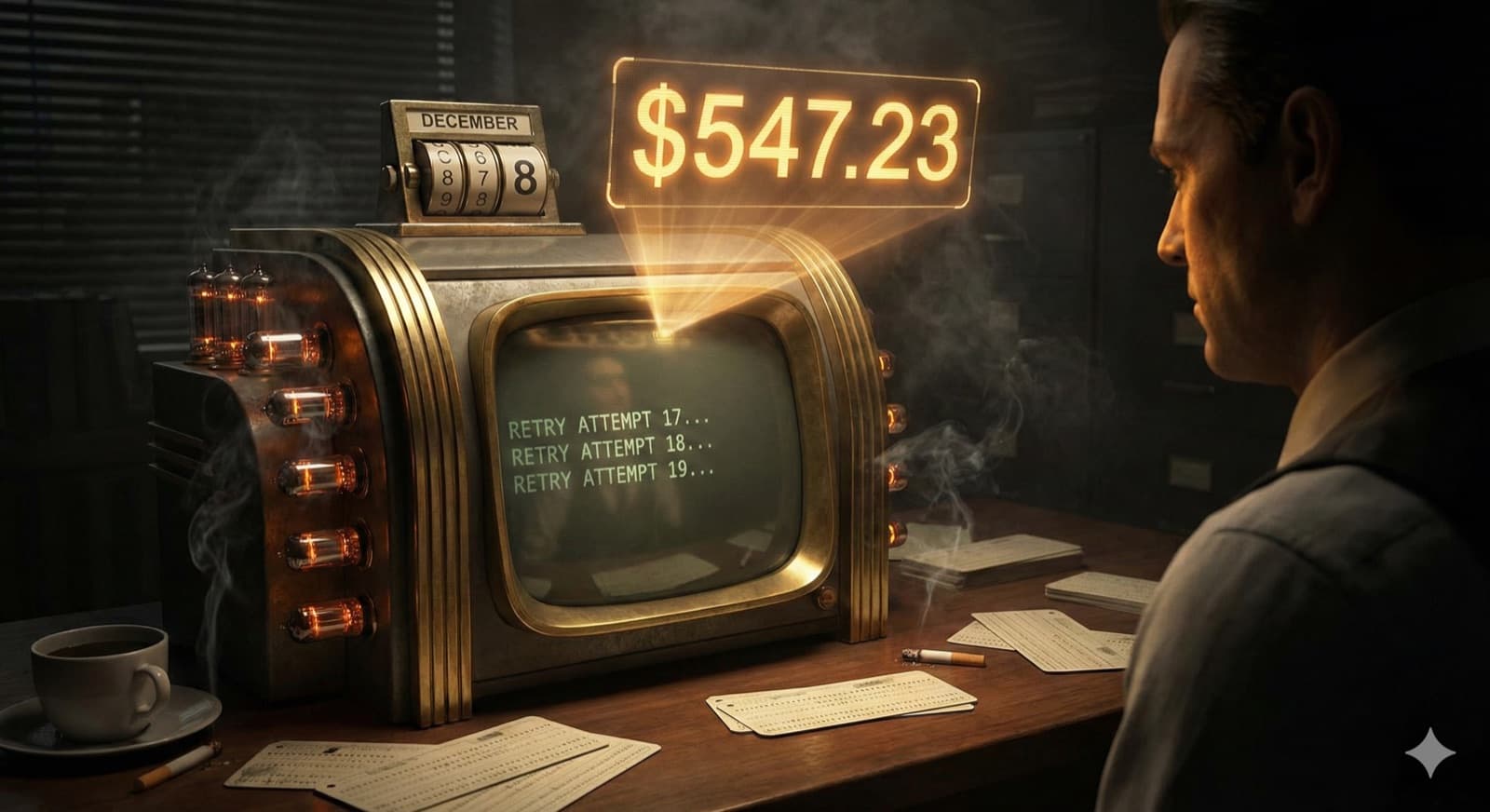

One day cost $547 in burned tokens. This is what it looked like.

The Architectural Problem

OK, so you add state. Memory. Persistence. That helps with single-agent work.

But here's what happens at scale. You run 3+ agents. They all need coordination. The coordinator needs to know what each agent is doing.

Classic multi-agent pattern: workers report back to a boss. Every result flows into the coordinator's context.

Math time (the old way):

- 8 agents

- 10K tokens each

- 80K tokens consumed in the coordinator

The boss becomes the bottleneck. Context fills up. Performance degrades. The expensive model (the one doing the coordination) chokes on data it doesn't need.
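The arithmetic is worth spelling out, because report-back load scales with both agent count and result size. This sketch uses the numbers above plus a 200K-token window. The window size is an assumption, so substitute your model's.

```python
def report_back_load(n_agents: int, tokens_per_result: int) -> int:
    """Tokens the coordinator absorbs when every worker returns its full result."""
    return n_agents * tokens_per_result


WINDOW = 200_000  # assumed context window

for n_agents in (8, 16):
    load = report_back_load(n_agents, 10_000)
    print(n_agents, load, f"{load / WINDOW:.0%} of the window")
# 8 80000 40% of the window
# 16 160000 80% of the window
```

With eight agents, the coordinator sits at 40% of that window before doing any coordinating of its own.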

SWE-Bench Pro quantified the complexity cliff. On simple single-file tasks, top models score 70%+. On realistic multi-file enterprise problems? GPT-5 and Claude Opus 4.1 drop to 23%. The performance cliff is brutal. Beyond 3 files, even frontier models struggle. Beyond 10 files, open-source alternatives hit near-zero. The study tests model capability, not execution patterns, but it explains why decomposition matters. Smaller tasks mean smaller contexts mean staying on the good side of the cliff.

I built this multi-agent coordinator before I found Gas Town. I called it "the kraken." I should have called it "the money pit."

The Factory Analogy

Steve nailed it in The Future of Coding Agents: coding agent shops think they've built workers. What they need is a factory.

A factory doesn't funnel all work product to a single manager who inspects every widget. A factory has:

- Workers who operate in isolation

- Status boards (not detailed reports)

- Quality gates (not real-time supervision)

- Graceful failure handling (not cascade failures)

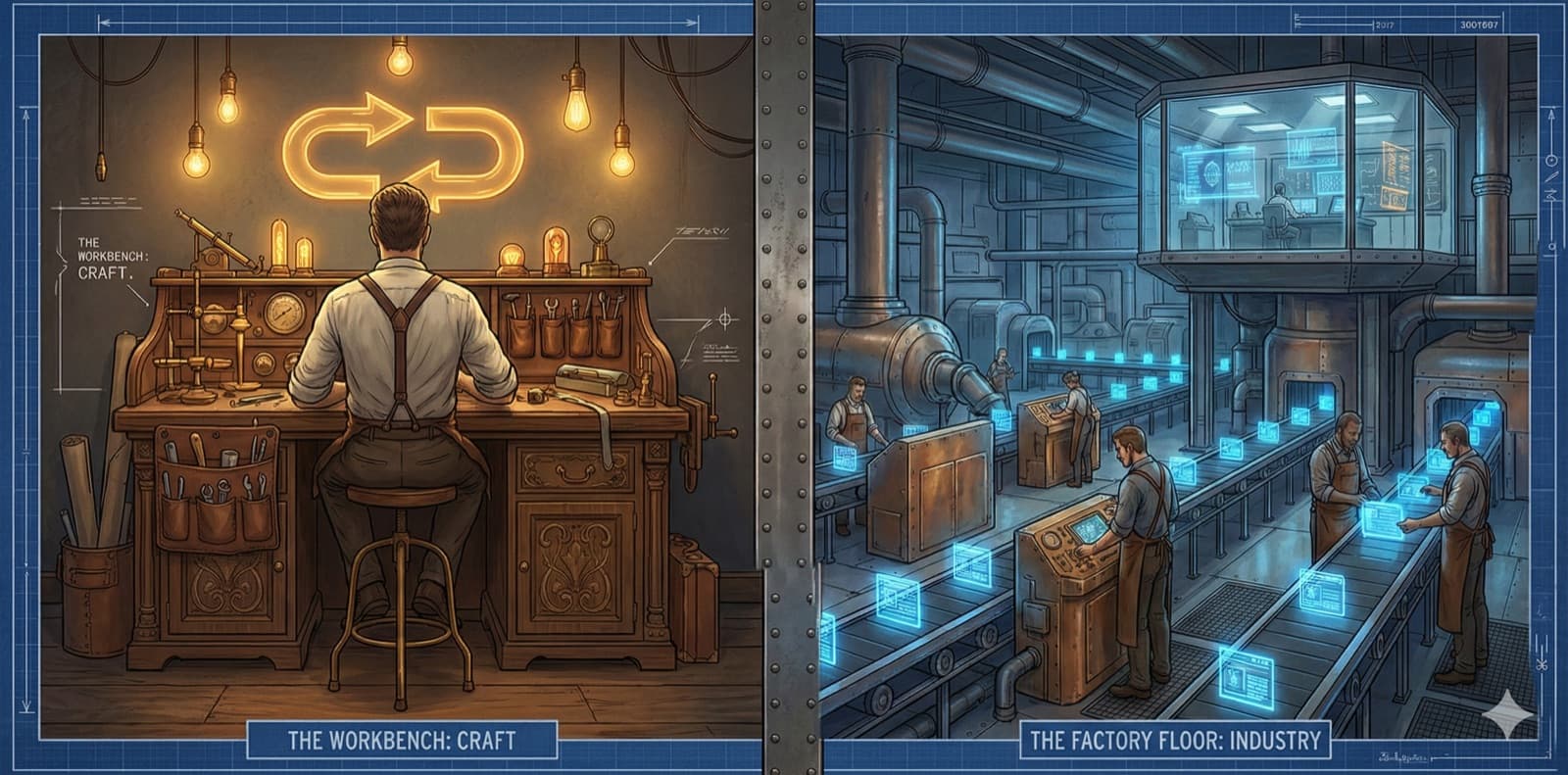

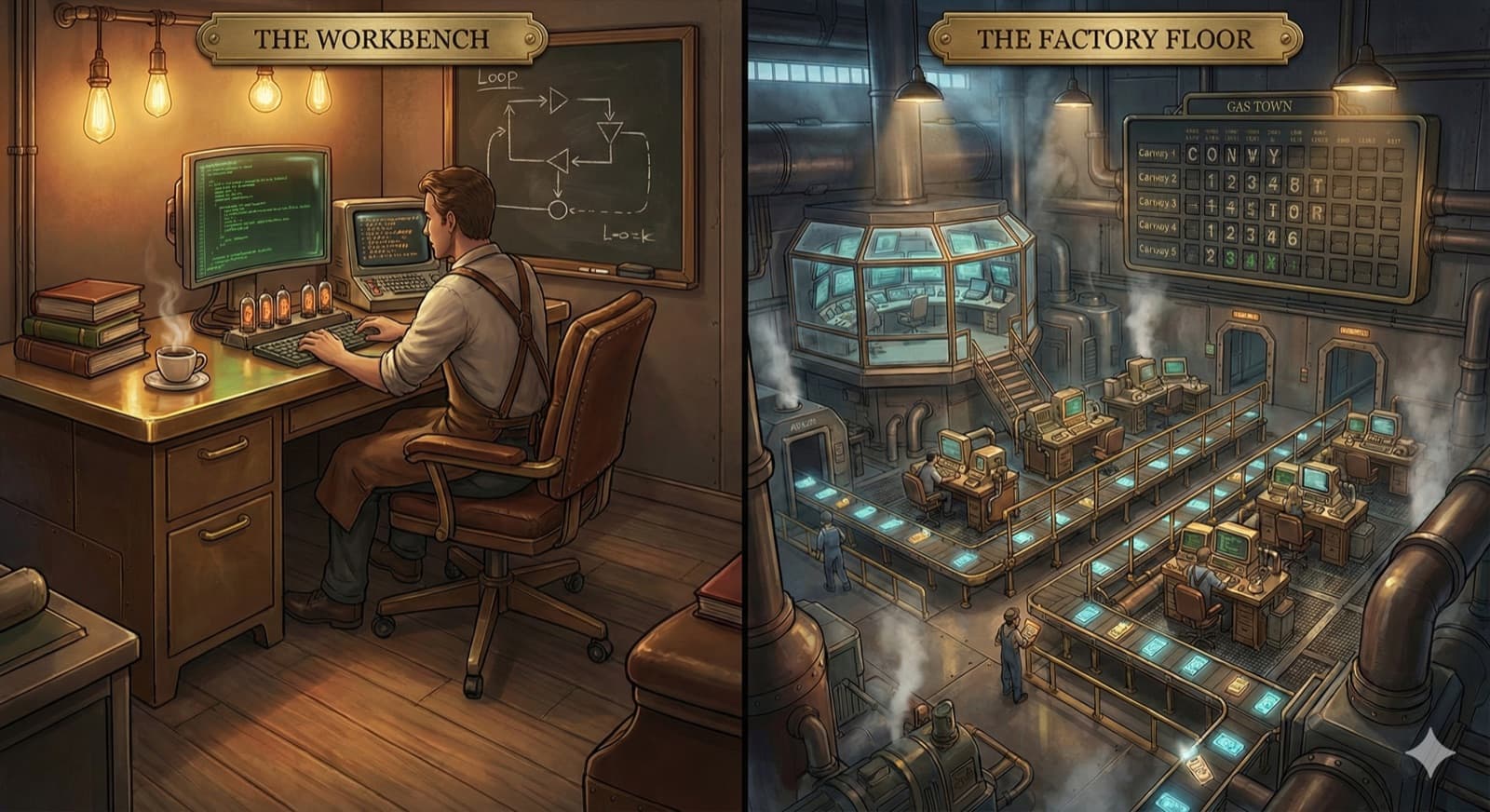

The REPL is a workbench. You stand at it. You do the work. It's fine for one person. Solo queue.

Gas Town is closer to a production line than a workbench. You manage capacity. You track throughput. You handle failures without stopping the line. It's the difference between soloing a dungeon and running it with a coordinated party. Same content, different throughput, different failure modes.

From REPL to ODMCR

Gas Town doesn't replace the REPL. It's what the REPL becomes when you add orchestration.

The insight: don't have agents return their work to a coordinator.

Each worker (Steve calls them "polecats") runs in complete isolation:

- Own terminal

- Own copy of the code

- Results go straight to git and a shared issue tracker

The coordinator (Mayor) just reads status updates from the tracker. It never loads the actual work. It doesn't know what the code looks like. It knows what the tickets look like.

It's managing work, not syntax.
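Here's a minimal sketch of what the Mayor's view amounts to: it aggregates ticket metadata and never touches diffs. The `Ticket` fields and status names are assumptions for illustration, not the Beads schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    issue_id: str
    assignee: str
    status: str  # hypothetical states: "open", "in_progress", "done", "blocked"


def status_board(tickets: list[Ticket]) -> dict[str, int]:
    """Count tickets per status: the coordinator's entire view of the work."""
    return dict(Counter(t.status for t in tickets))


def needs_attention(tickets: list[Ticket]) -> list[str]:
    """Blocked tickets are the only ones the coordinator acts on directly."""
    return [t.issue_id for t in tickets if t.status == "blocked"]


tickets = [
    Ticket("issue-1", "polecat-a", "done"),
    Ticket("issue-2", "polecat-b", "in_progress"),
    Ticket("issue-3", "polecat-c", "blocked"),
    Ticket("issue-4", "polecat-a", "done"),
]
print(status_board(tickets))     # {'done': 2, 'in_progress': 1, 'blocked': 1}
print(needs_attention(tickets))  # ['issue-3']
```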

What keeps it moving? Steve calls it GUPP, the Gastown Universal Propulsion Principle: "If there is work on your hook, YOU MUST RUN IT." Every worker has a persistent hook in the Beads database. Sling work to that hook, and the agent picks it up automatically. Sessions crash, context fills up, doesn't matter. The next session reads the hook and continues.
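The durability comes from the hook living outside the session. This is a minimal sketch that assumes a JSON file per worker as a stand-in for the Beads-backed hook. The real storage and commands differ, and `sling`, `peek`, and `complete` are hypothetical names.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


class Hook:
    """Hypothetical persistent hook: a JSON list of issue IDs stored on disk."""

    def __init__(self, path: Path):
        self.path = path

    def _load(self) -> list[str]:
        return json.loads(self.path.read_text()) if self.path.exists() else []

    def _save(self, queue: list[str]) -> None:
        self.path.write_text(json.dumps(queue))

    def sling(self, issue_id: str) -> None:
        """Coordinator puts work on the worker's hook."""
        self._save(self._load() + [issue_id])

    def peek(self) -> Optional[str]:
        queue = self._load()
        return queue[0] if queue else None

    def complete(self, issue_id: str) -> None:
        self._save([i for i in self._load() if i != issue_id])


def start_session(hook: Hook) -> Optional[str]:
    """GUPP: a new session's first act is to check the hook and run whatever is there."""
    return hook.peek()


hook_file = Path(tempfile.mkdtemp()) / "polecat-1.hook.json"
Hook(hook_file).sling("issue-42")
# The session crashes here. A brand-new session with no memory reads the same hook.
print(start_session(Hook(hook_file)))  # issue-42
```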

ODMCR: The Evolution

I've been running Gas Town for two weeks. The pattern that emerged is what I'm calling ODMCR: Observe-Dispatch-Monitor-Collect-Retry.

Underneath ODMCR is Steve's MEOW stack, Molecular Expression of Work. Beads (issues) chain into molecules (workflows), which cook from formulas (templates). Work becomes a graph of connected nodes in Git, not ephemeral context in a model's head. The workflow survives the session.
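To make the formula/molecule distinction concrete: a formula is a reusable template, and cooking it produces concrete, linked work items. The data structure below is hypothetical and for illustration only. It is not the Beads or Gas Town file format.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class Bead:
    bead_id: str
    title: str
    depends_on: list[str] = field(default_factory=list)


# Hypothetical formula: (step name, title template, steps it depends on)
IMPLEMENT_FEATURE = [
    ("design", "Design $feature", []),
    ("implement", "Implement $feature", ["design"]),
    ("test", "Write tests for $feature", ["implement"]),
]


def cook(formula: list[tuple[str, str, list[str]]], prefix: str, **params: str) -> list[Bead]:
    """Instantiate a formula into a molecule: beads with filled-in titles and resolved dependency IDs."""
    return [
        Bead(
            bead_id=f"{prefix}-{step}",
            title=Template(title).substitute(params),
            depends_on=[f"{prefix}-{dep}" for dep in deps],
        )
        for step, title, deps in formula
    ]


for bead in cook(IMPLEMENT_FEATURE, "auth", feature="token refresh"):
    print(bead.bead_id, "|", bead.title, "| after:", bead.depends_on)
```

The molecule is plain data that can live in Git, so the workflow outlives any session that works on it.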

| REPL Phase | ODMCR Phase | What Changed |
|---|---|---|
| Read | Observe | Multi-source state discovery (issues, git, convoy status) |
| Eval | Dispatch | Async execution to polecats, not blocking |
| Print | Monitor | Low-token polling (~100 tokens vs 80K) |
| (implicit) | Collect | Explicit verification of claimed completions |
| (implicit) | Retry | Exponential backoff with escalation |
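Here's one pass through the cycle as a minimal in-memory sketch. `dispatch`, `poll`, and `verify` are hypothetical callables. They stand in for slinging work to a polecat, reading its status from the tracker, and running real checks. The status names and the `max_parallel`/`max_attempts` limits are assumptions, and this version requeues failed work immediately rather than backing off.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Issue:
    issue_id: str
    status: str = "open"  # open -> dispatched -> claimed_done -> verified | open | escalated
    attempts: int = 0


def odmcr_iteration(
    issues: list[Issue],
    dispatch: Callable[[str], None],
    poll: Callable[[str], str],
    verify: Callable[[str], bool],
    max_parallel: int = 2,
    max_attempts: int = 3,
) -> None:
    # Observe: read current state from the tracker (here, the issue list)
    open_issues = [i for i in issues if i.status == "open"]
    in_flight = sum(1 for i in issues if i.status == "dispatched")
    # Dispatch: hand work to free workers and move on without waiting
    for issue in open_issues[: max(0, max_parallel - in_flight)]:
        dispatch(issue.issue_id)
        issue.status = "dispatched"
        issue.attempts += 1
    # Monitor: a cheap status poll, never the full result
    for issue in issues:
        if issue.status == "dispatched" and poll(issue.issue_id) == "done":
            issue.status = "claimed_done"
    # Collect: verify claimed completions instead of trusting them
    for issue in issues:
        if issue.status != "claimed_done":
            continue
        if verify(issue.issue_id):
            issue.status = "verified"
        # Retry: requeue failed work, or escalate to a human after too many attempts
        elif issue.attempts >= max_attempts:
            issue.status = "escalated"
        else:
            issue.status = "open"


issues = [Issue("a"), Issue("b"), Issue("c")]
dispatched: list[str] = []
for _ in range(3):
    odmcr_iteration(
        issues,
        dispatch=dispatched.append,
        poll=lambda issue_id: "done",             # stub: every worker claims success
        verify=lambda issue_id: issue_id != "b",  # stub: "b" never passes its checks
    )
print([(i.issue_id, i.status) for i in issues])
# [('a', 'verified'), ('b', 'escalated'), ('c', 'verified')]
```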

The coordinator stays light. ~750 tokens per iteration median, range 400-2,500 (estimated from Claude's token counter across 50 Mayor loops: reading convoy status, dispatching 1-2 issues, checking for blockers). The high end hits when multiple polecats report errors simultaneously. Sustainable for 8-hour autonomous runs at the median; spiky loads require headroom.

Steve calls the durability guarantee NDI, Nondeterministic Idempotence. The path through the workflow is unpredictable (agents crash, retry, take different routes), but the outcome converges as long as you keep throwing sessions at the hook. For unsolvable tasks, the system detects spiral patterns and escalates to human review. In intent, the pattern resembles Temporal's durable execution. It gets there through Git-backed state instead of event sourcing, though, and it lacks Temporal's enterprise hardening.

REPL assumes you're present. ODMCR assumes you're not.
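The retry side of NDI can be sketched as exponential backoff plus a crude spiral detector: if the same failure signature keeps recurring, stop retrying and escalate. This is a minimal sketch, not Gas Town's implementation. `attempt` is a hypothetical callable that returns success plus an error signature, and the thresholds are assumptions. `sleep` is injectable so the example runs instantly.

```python
import time
from typing import Callable


def run_until_converged(
    attempt: Callable[[], tuple[bool, str]],
    max_attempts: int = 5,
    spiral_threshold: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    seen: dict[str, int] = {}
    for n in range(max_attempts):
        ok, signature = attempt()
        if ok:
            return "done"
        # Spiral detection: the same failure, again and again
        seen[signature] = seen.get(signature, 0) + 1
        if seen[signature] >= spiral_threshold:
            return "escalate: spiral"
        # Exponential backoff before the next session picks the work back up
        if n < max_attempts - 1:
            sleep(base_delay * 2 ** n)
    return "escalate: attempts exhausted"


delays: list[float] = []
print(run_until_converged(lambda: (False, "ImportError: jwt"), sleep=delays.append), delays)
# escalate: spiral [1.0, 2.0]
```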

The 40% Rule

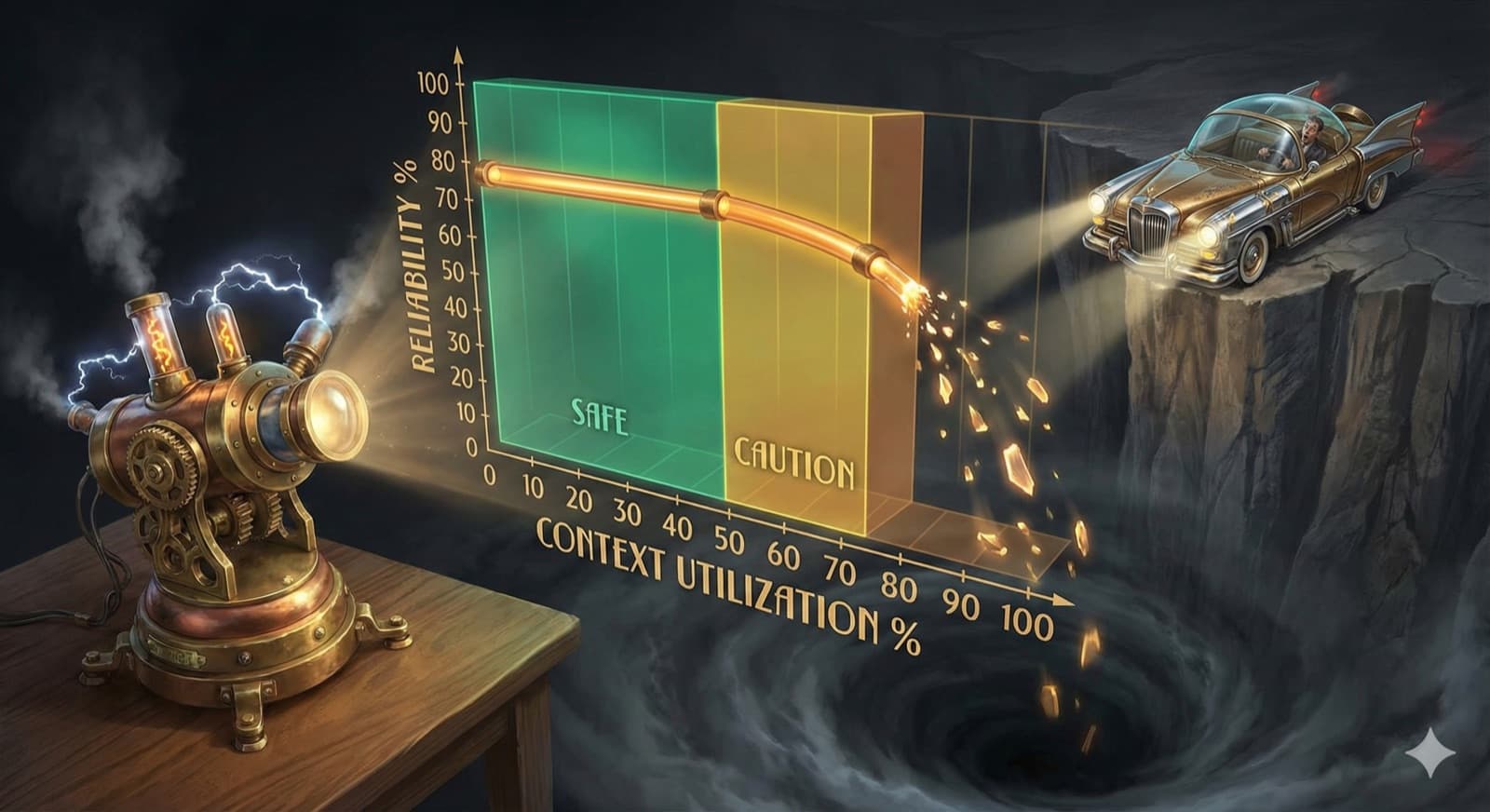

Never exceed 40% context utilization. Chroma's research shows LLMs don't degrade gracefully. They hit cliffs. A model advertising 200K tokens typically fails around 130K. My 40% threshold is conservative. I'd rather leave money on the table than watch an agent spiral.

The failure modes: context pollution, context confusion, context poisoning. Each bad token compounds. The model doesn't flag the degradation. It just starts producing confident garbage. By the time you notice, the whole session is unsalvageable.

Every REPL iteration compounds these losses. ODMCR sidesteps this: workers write to Git, not back to a coordinator's context. No summarization chain. The detail lives in the commit, not in compressed memory.
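In practice, the rule reduces to a budget check before each turn. This is a minimal sketch that assumes you can read the session's token usage from your tooling, and the function names are hypothetical. The important part is what happens at the threshold: write state to Git and the hook, then start a fresh session.

```python
def context_budget(window_tokens: int, max_utilization_pct: int = 40) -> int:
    """Largest context a session may use before handing off (integer math avoids float rounding)."""
    return window_tokens * max_utilization_pct // 100


def should_hand_off(used_tokens: int, window_tokens: int, max_utilization_pct: int = 40) -> bool:
    """True once the session reaches its budget: persist state and start fresh instead of continuing."""
    return used_tokens >= context_budget(window_tokens, max_utilization_pct)


print(context_budget(200_000))           # 80000
print(should_hand_off(60_000, 200_000))  # False
print(should_hand_off(85_000, 200_000))  # True
```

For a 200K window, the 80K budget sits well below the ~130K failure point cited above.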

The Numbers

Two weeks. Personal projects across 8 repos. Week one: Ralph. Week two: Gas Town.

| Metric | Ralph (REPL) | Gas Town (ODMCR) |
|---|---|---|
| Issues closed | 203 | 1,005 |
| Multi-file changes | 34 (17%) | 312 (31%) |
| Rework rate | 31% | 18% |
| Cost per issue | $2.47 | $0.89 |
| Total spend | ~$501 | ~$894 |
| Human intervention | Constant (hourly) | 3x daily check-ins |

Yes, total spend went up. I bought more compute. 5x throughput for 1.8x cost is a trade I'd make again.

The per-issue efficiency is what compounds.
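The headline ratios fall straight out of the table, and recomputing them is a quick sanity check. Spend figures are the approximate totals above.

```python
ralph = {"issues": 203, "multi_file": 34, "spend": 501}
gas_town = {"issues": 1005, "multi_file": 312, "spend": 894}

throughput_ratio = gas_town["issues"] / ralph["issues"]
cost_ratio = gas_town["spend"] / ralph["spend"]
print(f"{throughput_ratio:.2f}x issues for {cost_ratio:.2f}x spend")  # 4.95x issues for 1.78x spend

for name, week in (("Ralph", ralph), ("Gas Town", gas_town)):
    per_issue = week["spend"] / week["issues"]
    multi_share = week["multi_file"] / week["issues"]
    print(f"{name}: ${per_issue:.2f}/issue, {multi_share:.0%} multi-file")
# Ralph: $2.47/issue, 17% multi-file
# Gas Town: $0.89/issue, 31% multi-file
```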

A typical auth endpoint (token validation + refresh + tests) was 1 issue in Week 1, 3 issues in Week 2. Week 1: ~45 minutes, 2 restarts from context bloat. Week 2: ~20 minutes across 3 parallel polecats, no restarts. Same feature. Faster to working code.

Here's what the metrics don't capture: the knowledge flywheel. Every closed issue becomes a searchable pattern. Every formula that works gets templated. After two weeks: 47 reusable formulas, 1,005 issues of searchable history. When I start a new task, I search past work first. The system doesn't just execute. It accumulates.

Throughput is a flow metric. Accumulated patterns are stock that compounds.
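"Search past work first" can start very simply. This minimal sketch ranks closed issues by keyword overlap with the new task. The issue IDs and descriptions are made up, and real tooling would likely use better matching.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; crude, but enough to surface related past issues."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_past_work(query: str, closed: dict[str, str], top_k: int = 3) -> list[str]:
    """Rank closed issues by how many query words their descriptions share."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(desc)), issue_id) for issue_id, desc in closed.items()]
    matches = [(score, issue_id) for score, issue_id in scored if score > 0]
    matches.sort(key=lambda m: (-m[0], m[1]))  # best overlap first, ties broken by ID
    return [issue_id for _, issue_id in matches[:top_k]]


closed = {
    "issue-101": "Add JWT validation to auth middleware",
    "issue-102": "Create token refresh endpoint",
    "issue-103": "Fix flaky CSV export test",
}
print(search_past_work("JWT refresh token for auth", closed))  # ['issue-101', 'issue-102']
```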

n=1. Personal projects. Not controlled. Week 2 benefited from Week 1 learnings, and task complexity varied. Directional, not definitive.

When It Fails

Gas Town isn't magic. The failure modes I've hit:

- Retry spirals: Polecat gets stuck, burns tokens. Fix: validation gates that escalate to human review.

- Merge conflicts: Two polecats touch the same file. Fix: one file, one owner.

- False completions: Agent claims "done" when it's not. Fix: explicit verification, not trust.
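Two of those fixes are mechanical enough to sketch. The first is a claim table that refuses overlapping file sets. The second is a completion check that trusts an exit code rather than the agent's word. Both are minimal sketches: the file paths are hypothetical, and the check command stands in for whatever test suite a repo actually runs.

```python
import subprocess
import sys


class FileOwnership:
    """Hypothetical lock table enforcing 'one file, one owner' across in-flight polecats."""

    def __init__(self) -> None:
        self.owner: dict[str, str] = {}

    def try_claim(self, polecat: str, files: set[str]) -> bool:
        # Refuse the whole claim if any file is owned by a different polecat
        if any(self.owner.get(f, polecat) != polecat for f in files):
            return False
        for f in files:
            self.owner[f] = polecat
        return True

    def release(self, polecat: str) -> None:
        self.owner = {f: p for f, p in self.owner.items() if p != polecat}


def verify_completion(check_cmd: list[str]) -> bool:
    """Treat 'done' as done only if the check command (e.g. the test suite) exits 0."""
    return subprocess.run(check_cmd, capture_output=True).returncode == 0


locks = FileOwnership()
print(locks.try_claim("polecat-a", {"auth/middleware.py"}))                    # True
print(locks.try_claim("polecat-b", {"auth/middleware.py", "auth/refresh.py"}))  # False: conflict
locks.release("polecat-a")
print(locks.try_claim("polecat-b", {"auth/middleware.py", "auth/refresh.py"}))  # True
print(verify_completion([sys.executable, "-c", "raise SystemExit(1)"]))        # False
```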

Every system fails. The question is how it fails. REPL failures cascade. One bad context poisons everything. ODMCR failures isolate. You lose one polecat's work, not the whole convoy.

Why Not Just Wait?

Models improve fast. Claude 5 might handle multi-file tasks natively. Why build orchestration complexity?

Here's the thing: the problem isn't model intelligence. It's architecture.

TSMC doesn't make one giant chip. They make billions of small ones with extreme precision. "But code isn't standardized widgets!" True. The decomposition is the hard part: breaking "design auth system" into "add JWT validation to middleware," "create token refresh endpoint," "write integration tests." That's what the issue tracker and workflow templates are for. The factory pattern (decomposition, parallelism, quality gates) scales regardless of how good individual components get.
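Once decomposed, the pieces form a dependency graph. Dispatch can then proceed in waves, with everything whose dependencies are finished going out in parallel. This is a minimal sketch using Python's standard `graphlib`. The dependency edges are an assumption: here the two endpoints are independent, and the integration tests need both.

```python
from graphlib import TopologicalSorter


def dispatch_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; each wave depends only on earlier waves. Raises CycleError on cycles."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # in practice: mark done only after verification
    return waves


# Assumed dependencies for the auth example: task -> tasks it depends on
auth_system = {
    "add JWT validation to middleware": set(),
    "create token refresh endpoint": set(),
    "write integration tests": {"add JWT validation to middleware", "create token refresh endpoint"},
}
for n, wave in enumerate(dispatch_waves(auth_system), start=1):
    print(n, wave)
# 1 ['add JWT validation to middleware', 'create token refresh endpoint']
# 2 ['write integration tests']
```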

Important caveat: not all work decomposes cleanly. Research spikes, architectural explorations, and genuinely novel algorithms resist templating. The factory pattern works for production code (CRUD endpoints, test coverage, refactoring), not R&D. I still use single-agent REPL for exploratory work where the goal is understanding, not output. The factory is for when you know what to build; the workbench is for figuring out what to build.

Better models won't eliminate the need for orchestration. They'll make orchestration more powerful. A factory of Opus 5 agents will outperform a single Opus 5 agent the same way a factory of Opus 4.5 agents outperforms a single one today.

The pattern is the moat, not the model. And the flywheel (1,005 searchable issues, 47 reusable formulas) is the moat that compounds.

Try It

The stack:

- Beads - Git-backed issue tracking. The shared memory that survives sessions.

- Gas Town - Orchestration system. The factory.

- Vibe Coding - the book by Gene Kim & Steve Yegge. The philosophy.

Steve built the system. I'm documenting what I'm learning.

The Prediction

The REPL got us here. Orchestration gets us where we're going.

Steve predicts 2026 brings a new class of "100x engineer," people who've figured out how to wield agent orchestrators effectively. The bottleneck isn't the agents. It's how fast humans can review code. The game isn't "more agents." It's better orchestration of the agents you have.

By the end of 2026, at least two major players (Cursor, Copilot, Claude Code, Replit, Windsurf, or something new from Google or OpenAI) will ship orchestration primitives: multi-agent coordination, persistent workflow state, or declarative work queues. The single-agent REPL will still exist for simple tasks. Serious production use will look closer to ODMCR.

The winning pattern won't be the smartest model. It'll be the best orchestration of whatever models exist.

The natural next step: Kubernetes-style operators for agent orchestration. Declare your desired state, let the operator reconcile. The CLI is the proving ground, the kubectl before the control plane.
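The core of an operator is a reconcile function. It diffs desired state against observed state and emits the actions that close the gap. This is a minimal sketch that assumes desired state is just a worker count per work queue, a simplification since a real operator would track far more. The queue names and the `apply` stand-in are hypothetical.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[tuple[str, str, int]]:
    """Diff desired vs. observed worker counts per queue; return actions that close the gap."""
    actions: list[tuple[str, str, int]] = []
    for name in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(name, 0), actual.get(name, 0)
        if want > have:
            actions.append(("spawn", name, want - have))
        elif have > want:
            actions.append(("stop", name, have - want))
    return actions


def apply(actions: list[tuple[str, str, int]], actual: dict[str, int]) -> dict[str, int]:
    """Stand-in for actually starting/stopping workers; returns the new observed state."""
    state = dict(actual)
    for verb, name, count in actions:
        state[name] = state.get(name, 0) + (count if verb == "spawn" else -count)
    return {name: n for name, n in state.items() if n > 0}


desired = {"auth-queue": 3, "docs-queue": 1}
actual = {"auth-queue": 1, "legacy-queue": 2}
actions = reconcile(desired, actual)
print(actions)  # [('spawn', 'auth-queue', 2), ('spawn', 'docs-queue', 1), ('stop', 'legacy-queue', 2)]
print(reconcile(desired, apply(actions, actual)))  # [] (already converged)
```

Running reconcile again after the actions land yields nothing to do. That idempotence is what makes it safe to run the loop forever.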

We've done this before. DevOps taught us how to make unreliable things reliable. AI agents are just the newest unreliable component.

The foundation: 12-Factor AgentOps, infrastructure patterns for AI workflows. The journey: my vibe coding devlog series has the failure log, the Ralph collapse, and the practical .claude/ setup. This piece is the prediction.